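# Note: this cell must run after `test_dataset` and `collate_fn_style_test` are defined further down.
test_batch_size = 32

test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=test_batch_size,
                                          shuffle=False, collate_fn=collate_fn_style_test,
                                          num_workers=0)

import torch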

torch.cuda.is_available()

First, check whether a GPU is available. (The code also runs on a CPU, but slowly.)

import os

import pdb

import argparse

from dataclasses import dataclass, field

from typing import Optional

from collections import defaultdict

import torch

from torch.nn.utils.rnn import pad_sequence

import numpy as np

from tqdm import tqdm, trange

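from transformers import (
    BertForSequenceClassification,
    BertTokenizer,
    AutoConfig,
)
from torch.optim import AdamW  # transformers.AdamW is deprecated; torch.optim.AdamW is the maintained replacement

Import the transformer model classes and the other dependencies.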

def make_id_file(task, tokenizer):
    def make_data_strings(file_name):
        data_strings = []
        with open(os.path.join('C:\\Users\\Happy\\Desktop\\자연어처리\\pr1_data', file_name), 'r', encoding='utf-8') as f:
            # Encode each lower-cased line into a list of token ids
            id_file_data = [tokenizer.encode(line.lower()) for line in f.readlines()]
        for item in id_file_data:
            # Store each sentence as a space-separated string of ids
            data_strings.append(' '.join([str(k) for k in item]))
        return data_strings

    print('this will take some time...')
    train_pos = make_data_strings('sentiment.train.1')
    train_neg = make_data_strings('sentiment.train.0')
    dev_pos = make_data_strings('sentiment.dev.1')
    dev_neg = make_data_strings('sentiment.dev.0')
    print('make id file finished!')
    return train_pos, train_neg, dev_pos, dev_neg

This is the data for the NLP model. How you preprocess it will depend on your file format.
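The round trip used here (token ids, then a space-joined string, then a list of ints again in the dataset class) can be sketched without the real tokenizer. The `toy_encode` function and its vocabulary are hypothetical stand-ins for `tokenizer.encode`; only the string handling mirrors the code in this post.

```python
# Toy stand-in for tokenizer.encode (hypothetical vocabulary, not RoBERTa's).
TOY_VOCAB = {"<s>": 0, "</s>": 2, "good": 10, "food": 11, "bad": 12}

def toy_encode(line):
    """Lower-case, split on whitespace, and wrap the ids in <s> ... </s>."""
    ids = [TOY_VOCAB[word] for word in line.lower().split()]
    return [TOY_VOCAB["<s>"]] + ids + [TOY_VOCAB["</s>"]]

def to_id_string(ids):
    """Serialize ids as a space-separated string, as make_data_strings does."""
    return ' '.join(str(k) for k in ids)

def cast_to_int(id_string):
    """Parse an id string back into a list of ints, as the dataset class does."""
    return [int(word_id) for word_id in id_string.strip().split()]

id_string = to_id_string(toy_encode("Good food\n"))
restored = cast_to_int(id_string)
print(id_string)
print(restored)
```

Storing ids as strings is convenient if you want to cache them in a text file, at the cost of parsing them back into ints later.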

from transformers import (
    RobertaForSequenceClassification,
    RobertaTokenizer,
)
from torch.optim import AdamW  # transformers.AdamW is deprecated; torch.optim.AdamW is the maintained replacement

model = RobertaForSequenceClassification.from_pretrained('roberta-base')  # positive/negative task, so use the sequence-classification model

# tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
# tokenizer = BertTokenizer.from_pretrained('albert-base-v2')
# tokenizer = AlbertTokenizer.from_pretrained('albert-base-v2')
tokenizer = RobertaTokenizer.from_pretrained('roberta-base')
# tokenizer = BertTokenizer.from_pretrained('roberta-base')

Of the several candidate models, we will use the pretrained RoBERTa model.

A pretrained model works best with the tokenizer it was trained with, so we load the tokenizer that belongs to the same pretrained checkpoint.

train_pos, train_neg, dev_pos, dev_neg = make_id_file('yelp', tokenizer)

Run the tokenization.

class SentimentDataset(object):  # initial dataset setup
    def __init__(self, tokenizer, pos, neg):
        self.tokenizer = tokenizer
        self.data = []
        self.label = []

        for pos_sent in pos:
            self.data += [self._cast_to_int(pos_sent.strip().split())]
            self.label += [[1]]  # 1 = positive

        for neg_sent in neg:
            self.data += [self._cast_to_int(neg_sent.strip().split())]
            self.label += [[0]]  # 0 = negative

    def _cast_to_int(self, sample):
        return [int(word_id) for word_id in sample]

    def __len__(self):
        return len(self.data)

    def __getitem__(self, index):
        # The index is supplied by the DataLoader (in random order when shuffle=True)
        sample = self.data[index]
        # The model only accepts equal-length inputs within a batch; padding is done later in the collate function
        return np.array(sample), np.array(self.label[index])

train_dataset = SentimentDataset(tokenizer, train_pos, train_neg)
dev_dataset = SentimentDataset(tokenizer, dev_pos, dev_neg)

The datasets are built with a class.
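Because `__getitem__` returns sequences of different lengths, every batch has to be padded to a common length, with an attention mask marking which positions are real tokens. Here is a minimal pure-Python sketch of that idea; `pad_batch` is a hypothetical helper, not part of PyTorch or Transformers. The key detail is that the lengths are measured before padding, otherwise every row looks "full" and the mask is all ones.

```python
def pad_batch(sequences, pad_id):
    """Pad variable-length id lists to the longest one and build the mask."""
    lengths = [len(seq) for seq in sequences]  # measure BEFORE padding
    max_len = max(lengths)
    input_ids = [seq + [pad_id] * (max_len - n) for seq, n in zip(sequences, lengths)]
    # 1 marks a real token, 0 marks padding the model should ignore
    attention_mask = [[1] * n + [0] * (max_len - n) for n in lengths]
    return input_ids, attention_mask

batch = [[0, 10, 11, 2], [0, 12, 2]]  # toy id sequences
input_ids, attention_mask = pad_batch(batch, pad_id=1)  # 1 is the <pad> id of roberta-base
print(input_ids)       # [[0, 10, 11, 2], [0, 12, 2, 1]]
print(attention_mask)  # [[1, 1, 1, 1], [1, 1, 1, 0]]
```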

def collate_fn_style(samples):
    input_ids, labels = zip(*samples)
    # Record the true lengths before padding; the attention mask must be built from these
    lengths = [len(input_id) for input_id in input_ids]
    max_len = max(lengths)  # longest sequence in the batch
    # sorted_indices = np.argsort(lengths)[::-1]  # (unused) sort by length
    # Pad every sequence to max_len with the tokenizer's <pad> id
    input_ids = pad_sequence([torch.tensor(input_id) for input_id in input_ids],
                             batch_first=True, padding_value=tokenizer.pad_token_id)
    # Attention mask: 1 for real tokens, 0 for padding, so padded positions are ignored
    attention_mask = torch.tensor([[1] * n + [0] * (max_len - n) for n in lengths])
    token_type_ids = torch.zeros_like(input_ids)  # single-segment input, so all zeros
    # Explicit 0..max_len-1 positions, as in the original code; when position_ids is omitted,
    # RoBERTa instead derives positions offset by padding_idx + 1
    position_ids = torch.arange(max_len).unsqueeze(0).expand(len(lengths), -1)
    labels = torch.tensor(np.stack(labels, axis=0))
    return input_ids, attention_mask, token_type_ids, position_ids, labels

train_batch_size = 32

eval_batch_size=64

train_loader = torch.utils.data.DataLoader(train_dataset,
                                           batch_size=train_batch_size,
                                           shuffle=True, collate_fn=collate_fn_style,
                                           pin_memory=True,
                                           num_workers=0  # number of data-loading worker processes (0 = main process), not the number of GPUs
                                           )

dev_loader = torch.utils.data.DataLoader(dev_dataset, batch_size=eval_batch_size,
                                         shuffle=False, collate_fn=collate_fn_style,
                                         num_workers=0)

Now we start training the model.

Adjust the hyperparameters to suit your setup.

On Kaggle, more than one GPU is available.

# random seed
random_seed = 42
np.random.seed(random_seed)
torch.manual_seed(random_seed)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# model = BertForSequenceClassification.from_pretrained('bert-base-uncased')  # positive/negative task, so use a sequence-classification model
model.to(device)  # move the model to the GPU (or CPU); training uses the random seed set above
model.train()

learning_rate = 0.00001
optimizer = AdamW(model.parameters(), lr=learning_rate)

def compute_acc(predictions, target_labels):
    return (np.array(predictions) == np.array(target_labels)).mean()

train_epoch = 3
lowest_valid_loss = 9999.

Even with only 3 epochs, NLP training takes a long time.
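To get a feel for how the batch size and epoch count translate into work, the sketch below counts the iterations per epoch and lists the iterations at which a loop that validates "about five times per epoch" would evaluate. The dataset size of 1000 is a made-up number for illustration, and `eval_schedule` is a hypothetical helper; the interval rule (one fifth of the batches, never below 1) is an assumption modeled on the training loop in this post.

```python
import math

def eval_schedule(num_examples, batch_size, evals_per_epoch=5):
    """Return (batches per epoch, iterations at which validation runs)."""
    # A DataLoader keeps the last partial batch by default, hence the ceiling
    num_batches = math.ceil(num_examples / batch_size)
    interval = max(1, num_batches // evals_per_epoch)  # never 0, even on tiny datasets
    steps = [i for i in range(num_batches) if i != 0 and i % interval == 0]
    return num_batches, steps

num_batches, steps = eval_schedule(num_examples=1000, batch_size=32)
total_steps = num_batches * 3  # 3 epochs, as in train_epoch
print(num_batches)  # 32
print(steps)        # [6, 12, 18, 24, 30]
print(total_steps)  # 96
```

Note that the number of evaluations is not always exactly five: with 30 batches, for example, the same rule evaluates at iterations 6, 12, 18 and 24 only.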

# Log in to your W&B account
import wandb

wandb.login()  # enter your own API key when prompted; never paste the key into the code

wandb_project = "joge"
wandb_team = "goorm-team6"
model_name = "robertclass"
# model_name = "t5-small"

# Pass the hyperparameters through `config` so they are recorded with the run
wandb.init(project=wandb_project, name=model_name, entity=wandb_team,
           config={
               "learning_rate": learning_rate,
               "epochs": train_epoch,
               "batch_size": train_batch_size,
           })

This is Weights & Biases (W&B). The team above is the one we used during the NLP course. It lets you compare training speed and many other metrics at a glance, which is very handy.

train_acc = []

train_loss = []

valid_acc = []

valid_loss = []

cur_train_loss = []

cur_train_acc = []

report_to ="wandb"

for epoch in range(train_epoch):
    with tqdm(train_loader, unit="batch") as tepoch:
        for iteration, (input_ids, attention_mask, token_type_ids, position_ids, labels) in enumerate(tepoch):
            tepoch.set_description(f"Epoch {epoch}")
            input_ids = input_ids.to(device)
            attention_mask = attention_mask.to(device)
            token_type_ids = token_type_ids.to(device)
            position_ids = position_ids.to(device)
            labels = labels.to(device, dtype=torch.long)

            optimizer.zero_grad()
            output = model(input_ids=input_ids,
                           attention_mask=attention_mask,
                           token_type_ids=token_type_ids,
                           position_ids=position_ids,
                           labels=labels)
            loss = output.loss
            logits = output.logits

            # Predict the class with the larger logit
            batch_predictions = [0 if example[0] > example[1] else 1 for example in logits]
            batch_labels = [int(example) for example in labels]
            acc = compute_acc(batch_predictions, batch_labels)

            loss.backward()
            optimizer.step()

            cur_train_loss.append(loss.item())
            cur_train_acc.append(acc)
            tepoch.set_postfix(loss=loss.item())

            # Evaluate the model about five times per epoch (max(1, ...) avoids a zero interval on tiny datasets)
            if iteration != 0 and iteration % max(1, len(train_loader) // 5) == 0:
                model.eval()
                with torch.no_grad():
                    cur_valid_loss = []
                    cur_valid_acc = []
                    for input_ids, attention_mask, token_type_ids, position_ids, labels in tqdm(dev_loader,
                                                                                               desc='Eval',
                                                                                               position=1,
                                                                                               leave=None):
                        input_ids = input_ids.to(device)
                        attention_mask = attention_mask.to(device)
                        token_type_ids = token_type_ids.to(device)
                        position_ids = position_ids.to(device)
                        labels = labels.to(device, dtype=torch.long)

                        output = model(input_ids=input_ids,
                                       attention_mask=attention_mask,
                                       token_type_ids=token_type_ids,
                                       position_ids=position_ids,
                                       labels=labels)
                        logits = output.logits
                        loss = output.loss

                        batch_predictions = [0 if example[0] > example[1] else 1 for example in logits]
                        batch_labels = [int(example) for example in labels]

                        cur_valid_loss.append(loss.item())
                        cur_valid_acc.append(compute_acc(batch_predictions, batch_labels))
                model.train()  # switch back to training mode (re-enables dropout) after evaluation

                # acc, loss
                mean_train_acc = sum(cur_train_acc) / len(cur_train_acc)
                mean_train_loss = sum(cur_train_loss) / len(cur_train_loss)
                mean_valid_acc = sum(cur_valid_acc) / len(cur_valid_acc)
                mean_valid_loss = sum(cur_valid_loss) / len(cur_valid_loss)

                train_acc.append(mean_train_acc)
                train_loss.append(mean_train_loss)
                valid_acc.append(mean_valid_acc)
                valid_loss.append(mean_valid_loss)

                cur_train_acc = []
                cur_train_loss = []

                # wandb
                wandb.log({
                    "Train Loss": mean_train_loss,
                    "Train Accuracy": mean_train_acc,
                    "Valid Loss": mean_valid_loss,
                    "Valid Accuracy": mean_valid_acc
                })

                # Keep the checkpoint with the lowest validation loss so far
                if lowest_valid_loss > mean_valid_loss:
                    lowest_valid_loss = mean_valid_loss
                    print('Acc for model which have lower valid loss: ', mean_valid_acc)
                    torch.save(model.state_dict(), "./pytorch_model.bin")

wandb.finish()

Training runs with the W&B logging mixed in.
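The checkpointing rule in the loop (average the per-batch validation losses, save only when the average beats the best seen so far) can be isolated in a few lines of plain Python. The loss values below are invented for illustration and `track_best` is a hypothetical helper; a real run would call `torch.save` where this sketch records an index.

```python
def mean(values):
    return sum(values) / len(values)

def track_best(valid_losses_per_eval):
    """Return the best mean loss and the evaluations that would trigger a save."""
    lowest = float('inf')
    saved_at = []
    for i, batch_losses in enumerate(valid_losses_per_eval):
        loss = mean(batch_losses)
        if loss < lowest:       # strictly better than every earlier evaluation
            lowest = loss
            saved_at.append(i)  # here the training loop would save the state_dict
    return lowest, saved_at

# Four evaluations, each with two per-batch losses (made-up numbers)
history = [[0.70, 0.60], [0.50, 0.40], [0.55, 0.45], [0.30, 0.40]]
lowest, saved_at = track_best(history)
print(saved_at)  # [0, 1, 3]
```

Starting from `float('inf')` instead of a large constant such as 9999 is a small design choice: it guarantees that the first evaluation always produces a checkpoint.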

torch.save(model, './model_roBert_joge.pt')

Save the whole model once at this point.

def make_id_file_test(tokenizer, test_dataset):
    # `test_dataset` is expected to be an iterable of raw sentence strings
    data_strings = []
    id_file_data = [tokenizer.encode(sent.lower()) for sent in test_dataset]
    for item in id_file_data:
        data_strings.append(' '.join([str(k) for k in item]))
    return data_strings

test = make_id_file_test(tokenizer, test_dataset)

The test set is prepared in the same way.

class SentimentTestDataset(object):
    def __init__(self, tokenizer, test):
        self.tokenizer = tokenizer
        self.data = []

        for sent in test:
            self.data += [self._cast_to_int(sent.strip().split())]

    def _cast_to_int(self, sample):
        return [int(word_id) for word_id in sample]

    def __len__(self):
        return len(self.data)

    def __getitem__(self, index):
        sample = self.data[index]
        return np.array(sample)

test_dataset = SentimentTestDataset(tokenizer, test)

def collate_fn_style_test(samples):
    input_ids = samples
    # Record the true lengths before padding; the attention mask must be built from these
    lengths = [len(input_id) for input_id in input_ids]
    max_len = max(lengths)
    # sorted_indices = np.argsort(lengths)[::-1]
    # Training batches carry both inputs and labels, but test batches have no labels.
    # Predictions are matched to the test rows by position only, so reordering the samples here would misalign them.
    input_ids = pad_sequence([torch.tensor(input_id) for input_id in input_ids],
                             batch_first=True, padding_value=tokenizer.pad_token_id)
    attention_mask = torch.tensor([[1] * n + [0] * (max_len - n) for n in lengths])
    token_type_ids = torch.zeros_like(input_ids)
    position_ids = torch.arange(max_len).unsqueeze(0).expand(len(lengths), -1)
    return input_ids, attention_mask, token_type_ids, position_ids

model.eval()
with torch.no_grad():
    predictions = []
    for input_ids, attention_mask, token_type_ids, position_ids in tqdm(test_loader,
                                                                        desc='Test',
                                                                        position=1,
                                                                        leave=None):
        input_ids = input_ids.to(device)
        attention_mask = attention_mask.to(device)
        token_type_ids = token_type_ids.to(device)
        position_ids = position_ids.to(device)

        output = model(input_ids=input_ids,
                       attention_mask=attention_mask,
                       token_type_ids=token_type_ids,
                       position_ids=position_ids)
        logits = output.logits

        batch_predictions = [0 if example[0] > example[1] else 1 for example in logits]
        predictions += batch_predictions

Inside `torch.no_grad()`, PyTorch does not record the computation graph or keep the intermediate values needed for backpropagation, so inference uses less memory.
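The effect of `torch.no_grad()` is easy to observe on a tiny tensor. This is a minimal sketch with a made-up three-element tensor, unrelated to the model above: the same computation is attached to the autograd graph in normal mode and detached from it under `no_grad`.

```python
import torch

weights = torch.ones(3, requires_grad=True)

# Normal mode: the result is attached to the autograd graph
tracked = (weights * 2).sum()

# Under no_grad: no graph is recorded for the result
with torch.no_grad():
    untracked = (weights * 2).sum()

print(tracked.requires_grad)    # True
print(untracked.requires_grad)  # False
print(untracked.grad_fn)        # None
```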

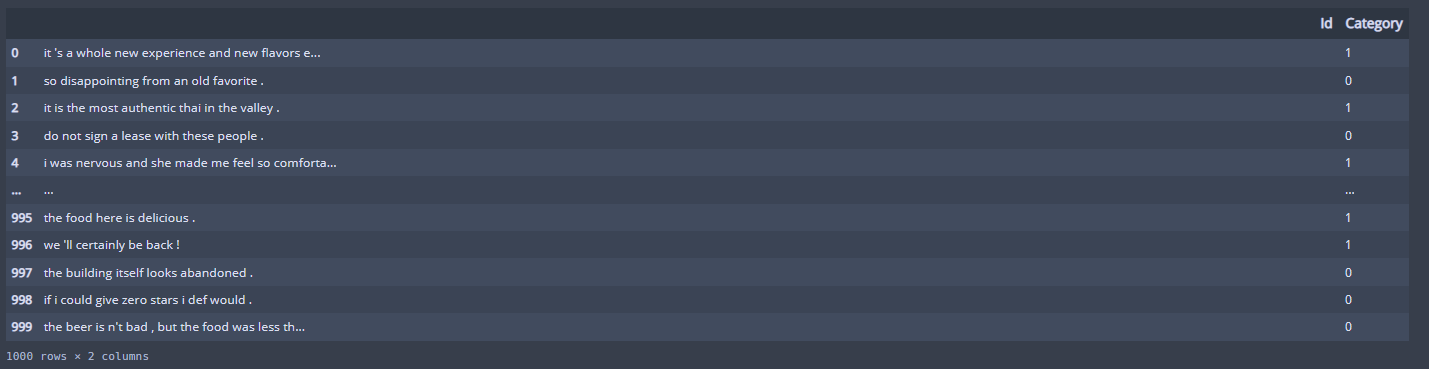

test_df['Category'] = predictions

test_df.to_csv('submission_robert.csv', index=False)

Now save the output values.

The Category column holds the positive/negative prediction for each row.
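For illustration, here is roughly what such a submission file looks like, written with the standard `csv` module instead of pandas. The `Id` column name and the sample values are assumptions (the real `test_df` columns are not shown in this post); only the `Category` column comes from the code above.

```python
import csv
import io

predictions = [1, 0, 1]  # hypothetical model outputs (1 = positive, 0 = negative)
ids = [0, 1, 2]          # hypothetical row ids

buffer = io.StringIO()   # in-memory file, so the sketch writes nothing to disk
writer = csv.writer(buffer, lineterminator="\n")
writer.writerow(["Id", "Category"])
writer.writerows(zip(ids, predictions))

csv_text = buffer.getvalue()
print(csv_text)
```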

This is the roberta-base positive/negative sentiment classification that I handled as my first project in the KAIST & goorm NLP specialist training program.
